Why Banning Facial Recognition is Not Enough

New policy is on the horizon for facial recognition technology. But how can the US incorporate key ethical concerns, such as racial bias?

A blog of the Science and Technology Innovation Program

In an open letter to Congress published on June 8, 2020, IBM CEO Arvind Krishna announced the sunset of IBM’s general-purpose facial analysis program. Calling for reform of a biased criminal justice system, Krishna expressed support for the George Floyd Justice in Policing Act of 2020 (H.R.7120). Among other provisions, this bill would prohibit the use of facial recognition technology to analyze images from in-car or body-worn video cameras. Krishna also called for new policies around bias testing and auditing in artificial intelligence (AI) systems, noting that “now is the time to begin a national dialogue on whether and how facial recognition technology should be employed by domestic law enforcement agencies.”

Other technology companies chimed in. Amazon implemented a one-year moratorium on police use of its facial recognition technologies, hoping this “might give Congress enough time to implement appropriate rules.” Microsoft also announced new limits. In an industry that rarely self-regulates, these decisions reveal the magnitude of the issues relating to bias in facial recognition, and their impact on civil liberties in policing and criminal justice.

All three companies not only announced self-regulation, but actively called for policy reform. While policy reform is needed, simply banning facial recognition is not enough. Any US strategy for AI should include a framework for considering ethical issues as a key component of all research and development priorities.

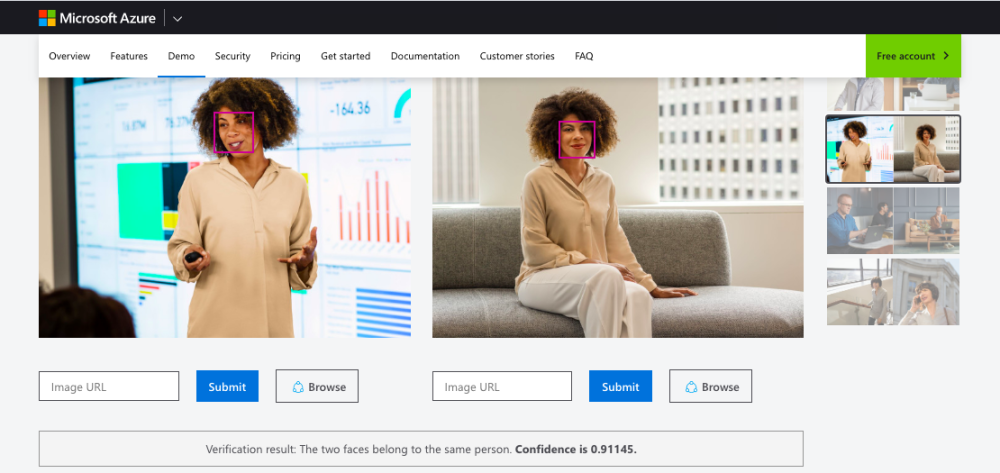

Facial recognition is a biometric technology: one in which physiological characteristics, including but not limited to fingerprints, iris patterns, or facial features, are measured to identify an individual. Facial recognition can be useful for identity verification through one-to-one matching. Such applications, which confirm a single person’s identity, help consumers unlock their smartphones and enable travelers to participate in automated programs for airport security screening or passport control.

Facial recognition is also used for identification through one-to-many matching, in which a single image is compared against a database of many images. This is the approach used to analyze images from body cameras. Within the US, images from body cams are typically compared to images in databases of criminal mugshots, though many states also use databases including DMV records. Due to advances in cloud storage, the feasibility of real-time one-to-many analysis is already being investigated by groups like Customs and Border Protection (CBP).
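The difference between the two matching modes can be made concrete with a minimal sketch. It assumes each face has already been converted into a numeric vector (an "embedding") and that similarity is measured with cosine similarity; the function names, the toy vectors, and the 0.8 threshold are all hypothetical choices for illustration, not a description of any deployed system.

```python
import math

def cosine_similarity(a, b):
    """Similarity of two vectors: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def verify(probe, enrolled, threshold=0.8):
    """One-to-one: does the probe match the single identity being claimed?"""
    return cosine_similarity(probe, enrolled) >= threshold

def identify(probe, gallery, threshold=0.8):
    """One-to-many: list every gallery record at or above the threshold, best first."""
    scored = [(name, cosine_similarity(probe, emb)) for name, emb in gallery.items()]
    candidates = [(name, score) for name, score in scored if score >= threshold]
    return sorted(candidates, key=lambda pair: pair[1], reverse=True)

# Toy 3-dimensional vectors (assumption); real embeddings are far longer.
gallery = {
    "record_a": [0.9, 0.1, 0.0],
    "record_b": [0.0, 1.0, 0.0],
    "record_c": [0.8, 0.3, 0.1],
}
probe = [1.0, 0.2, 0.0]

print(verify(probe, gallery["record_a"]))            # one claimed identity
print([name for name, _ in identify(probe, gallery)])  # search of the whole gallery
```

In this toy gallery the one-to-many search returns two candidate records for a single probe image. At most one of them can be the right person, which is why searching a large database leaves more room for false matches than confirming one claimed identity.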

Facial recognition algorithms fail by making one of two errors. A false positive identifies a match between two images that do not depict the same individual. A false negative fails to detect a match when one exists. While both types of errors are problematic, false positives are especially concerning from a civil rights perspective, because a false match can implicate the wrong person.

For example, the 2018 Gender Shades study evaluated the performance of different commercial facial analysis algorithms. The authors reported strong demographic effects: darker-skinned women were misclassified at rates as high as 34.7%, while the maximum error rate for lighter-skinned men was reported as 0.8%. While this research has been criticized for methodological issues, Gender Shades is still important for elevating the conversation around bias in facial recognition. The study also demonstrated the relevance of intersectionality, a theoretical framework that explores how different aspects of an individual’s identity, such as race and gender, can interact to create unique types of discrimination or privilege.

These findings have also been validated in other studies. In 2019, the National Institute of Standards and Technology (NIST) conducted a large-scale assessment of the performance of facial recognition related to demographic effects. With regard to racial differences, NIST found that false positives were highest for West African, East African, and East Asian individuals, with the exception of algorithms developed in China. False positives were slightly higher for South Asian and Central American people, and lowest for Eastern Europeans. NIST also reported demographic effects related to gender and age. These findings are particularly concerning given that there is no federal standard, or agreed-upon threshold, that determines how accurate a facial recognition algorithm must be before it is used in law enforcement.
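To show what measuring a demographic differential involves, here is a minimal sketch that computes false positive and false negative rates separately for each group. The trial records are made-up toy data for two hypothetical groups and do not reproduce any figure from Gender Shades or NIST; the function name and record layout are likewise assumptions.

```python
from collections import defaultdict

def error_rates_by_group(trials):
    """trials: iterable of (group, is_same_person, predicted_match) tuples."""
    counts = defaultdict(lambda: {"fp": 0, "fn": 0, "impostor": 0, "genuine": 0})
    for group, is_same_person, predicted_match in trials:
        c = counts[group]
        if is_same_person:
            c["genuine"] += 1
            if not predicted_match:
                c["fn"] += 1  # missed a true match: false negative
        else:
            c["impostor"] += 1
            if predicted_match:
                c["fp"] += 1  # matched two different people: false positive
    return {
        group: {
            "false_positive_rate": c["fp"] / c["impostor"] if c["impostor"] else None,
            "false_negative_rate": c["fn"] / c["genuine"] if c["genuine"] else None,
        }
        for group, c in counts.items()
    }

# Made-up toy trials (assumption), six comparisons per group.
trials = [
    ("group_1", False, False), ("group_1", False, False),
    ("group_1", False, False), ("group_1", False, True),
    ("group_1", True, True), ("group_1", True, True),
    ("group_2", False, True), ("group_2", False, True),
    ("group_2", False, False), ("group_2", False, False),
    ("group_2", True, True), ("group_2", True, False),
]

rates = error_rates_by_group(trials)
for group, r in rates.items():
    print(group, r)
```

In this toy data the combined false positive rate across both groups is 3 in 8, which hides the fact that one group experiences twice the rate of the other. Reporting rates per group, rather than a single overall accuracy figure, is what makes such gaps visible.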

In the findings, Gender Shades isolated one important variable: training data. Researchers found that many benchmark training data sets were overwhelmingly biased towards lighter-skinned individuals. While NIST did not attempt to identify causation, the agency did suggest that attempts to mitigate demographic differentials could benefit from research on aspects including “threshold elevation, refined training, more diverse training data, and discovery of features with greater discriminative power.”

While recent conversations may focus on facial recognition, other applications of machine learning (ML) in criminal justice, including the use of risk assessment instruments (RAIs) such as the Correctional Offender Management Profiling for Alternative Sanctions (COMPAS) in parole decisions, are also under scrutiny. Research demonstrates that Black and non-white Hispanic people are incarcerated at much higher rates than white people, are more likely to be incarcerated for the same behaviors, and are incarcerated at younger ages. When RAIs like COMPAS are trained on data that reflect these disparities, they are likely to make predictions on recidivism that lead to biased outcomes that negatively (and unfairly) impact individuals.

The ethics of facial recognition also extend beyond bias. Citing privacy concerns, a handful of states have enacted legislation limiting commercial biometric identification. Illinois’ laws have held up to judicial scrutiny. In Patel v. Facebook, a class action lawsuit that settled for $550 million, Facebook’s use of facial recognition was challenged as a violation of rights protected by the Illinois Biometric Information Privacy Act. The ruling that allowed the case to proceed was significant because it did not require plaintiffs to identify specific grievances or harms, but treated the privacy violation itself as the injury.

Facial recognition technologies are just one small category of ML applications. And, while ethical landmines like bias and privacy challenge a wide range of AI and ML applications, the potential for these technologies to bring economic and social benefits to Americans is unmatched by any advance since the Internet. Programs, including the Trump Administration’s initiative on Artificial Intelligence for the American People, are accelerating AI through direct investment, reduction of regulatory barriers, workforce training, and strategic international cooperation. Given both the broad value of ML and the accelerated pace of innovation, there is an urgent and compelling opportunity to move beyond bans and proactively explore a grand strategy or national action plan for AI that includes an ethical framework to help guide priorities in research, development, and use.

There are many opportunities to shape the ethical development of facial recognition and other ML technologies.

In the earliest stages, work on ethics often begins with principles. The United States has already contributed to and endorsed the OECD’s five values-based principles for responsible stewardship of trustworthy AI. The Department of Defense (DoD) issued five AI ethical principles to cover the unique challenges faced by defense and security communities. Most recently, the White House issued a draft memorandum proposing ten “legally binding” principles to help agencies formulate regulatory and non-regulatory approaches.

Principles can help identify guiding priorities. For example, one of the OECD’s values-based principles states:

AI systems should be designed in a way that respects the rule of law, human rights, democratic values and diversity, and they should include appropriate safeguards – for example, enabling human intervention where necessary – to ensure a fair and just society. (OECD)

This principle provides grounding and context for understanding ethical issues associated with facial recognition technologies, including bias in systems that struggle with demographic diversity and the need to keep humans “in the loop” when criminal justice decisions are made.

But while principles codify expectations, they do not suggest how these expectations might be met. One step towards making generic principles more concrete is writing definitions, which may happen while crafting policy (“artificial intelligence” was formally defined in the John S. McCain National Defense Authorization Act for Fiscal Year 2019) or through standards development processes. A second OECD principle states, “There should be transparency and responsible disclosure around AI systems to ensure that people understand AI-based outcomes and can challenge them.” However, NIST notes that “without clear standards defining what algorithmic transparency actually is and how to measure it, it can be prohibitively difficult to objectively evaluate whether a particular AI system… meets expectations.” Further, NIST recommends that, beyond definitions, technical standards and guidance are needed to support transparency and other aspects of trustworthy AI. In addition to standards, work on related tools, such as data sets and benchmarks (challenge statements created to measure performance around strategically selected scenarios), can capture other types of key performance indicators (KPIs).

Once defined, standards can then be used to inform technical or non-technical requirements for ethical AI in areas like facial recognition. For example, a technical requirement for a facial recognition system used in police body cams could be that the system does not display “matches” whose confidence score falls below a set threshold. Non-technical requirements can look across the AI system lifecycle to create provisions for development, procurement, and use. For example, algorithmic impact assessments are being used by US allies like Canada to help government agencies think through and proactively mitigate risk before a system is developed or deployed. Such strategies could help us think through the ethics of facial recognition, and can also be helpful for identifying and mitigating issues of bias or privacy in other ML and AI applications.
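The body cam requirement described above could be expressed in software along the following lines. This is only a sketch of one possible reading of such a requirement: the `Candidate` type, the `matches_to_display` function, the record IDs, and the 0.90 cutoff are all hypothetical, and choosing an actual cutoff would be exactly the kind of decision a standard would need to inform.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Candidate:
    record_id: str
    score: float  # similarity score in [0, 1]; higher means more similar

def matches_to_display(candidates, min_score):
    """Return only candidates scoring at or above min_score, best first.

    Anything weaker is suppressed, so low-confidence "matches" are never shown.
    """
    if not 0.0 <= min_score <= 1.0:
        raise ValueError("min_score must be between 0 and 1")
    shown = [c for c in candidates if c.score >= min_score]
    return sorted(shown, key=lambda c: c.score, reverse=True)

# Hypothetical search results for one probe image.
candidates = [
    Candidate("rec-17", 0.62),
    Candidate("rec-04", 0.97),
    Candidate("rec-31", 0.91),
]

shown = matches_to_display(candidates, min_score=0.90)
print([c.record_id for c in shown])
```

Raising the cutoff reduces false positives at the cost of more false negatives, so the threshold is a policy choice as much as an engineering one. A filter like this also complements, rather than replaces, human review of whatever candidates remain.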

There are many calls to ban facial recognition technologies. Unfortunately, simply placing a moratorium on research and development efforts (whether voluntary, or backed by regulation) will address neither broader issues around bias in criminal justice, nor broader ethical issues such as privacy in facial recognition. As IBM’s Krishna suggests, now is the time to begin a national dialogue, but not just about facial recognition. As innovation continues to accelerate, a grand strategy for AI that includes an ethical framework is critical for enabling us to support and reinforce American values, both domestically and on the global stage.

The Science and Technology Innovation Program (STIP) serves as the bridge between technologists, policymakers, industry, and global stakeholders.